The dark side of social media and advocates’ efforts to combat it

Concerned parents file hundreds of lawsuits against Meta and TikTok related to harmful content.

Content Warning: The following content discusses topics related to mental health challenges, including self-harm, body image issues and eating disorders.

Scrolling and posting on social media apps used to be fun for Delia McDade Clay.

But in recent months, McDade Clay noticed that her Instagram habits were leaving her feeling negative and taking a toll on her mental health and self-worth.

As a busy Loyola University Chicago grad student and Division I cross country and track runner, McDade Clay said that she was ready to log off social media and focus on being present. On March 1, she posted on Instagram to let her more than 2,000 followers know that she would be taking a break from social media.

“While it started out as fun, posting on Instagram has become a toxic cycle of putting the most desirable aspects of my life on display for validation during the very low moments,” McDade Clay said in her Instagram story.

McDade Clay’s struggles with Instagram are personal, but they reflect an experience many young people share on social media. Over the last two decades, a rise in adolescent depression and suicidal behavior has coincided with the emergence of social media, according to the National Institutes of Health.

“Concerns have risen around the effects of social media on adolescents’ mental health due to decreased face-to-face interpersonal interactions, addiction-like behaviors, online bullying and social pressure through increased comparison,” the National Institutes of Health review said.

Currently, parents have brought hundreds of lawsuits against social media giants such as Meta (Facebook and Instagram), TikTok, Snapchat, Twitter and YouTube, accusing the companies of knowingly getting their children addicted to harmful content.

“Meta preys on our young people and has chosen to profit by knowingly targeting and exploiting their vulnerabilities,” Massachusetts Attorney General Andrea Joy Campbell said in a press release. “In doing so, Meta has significantly contributed to the ongoing mental health crisis among our children and teenagers.”

Some families in these lawsuits claim that the platforms lack adequate parental controls because children can easily bypass age and identity verification, according to Shouse Injury Law Group. They also argue that social media companies design their algorithms to maximize engagement at the expense of children’s mental health.

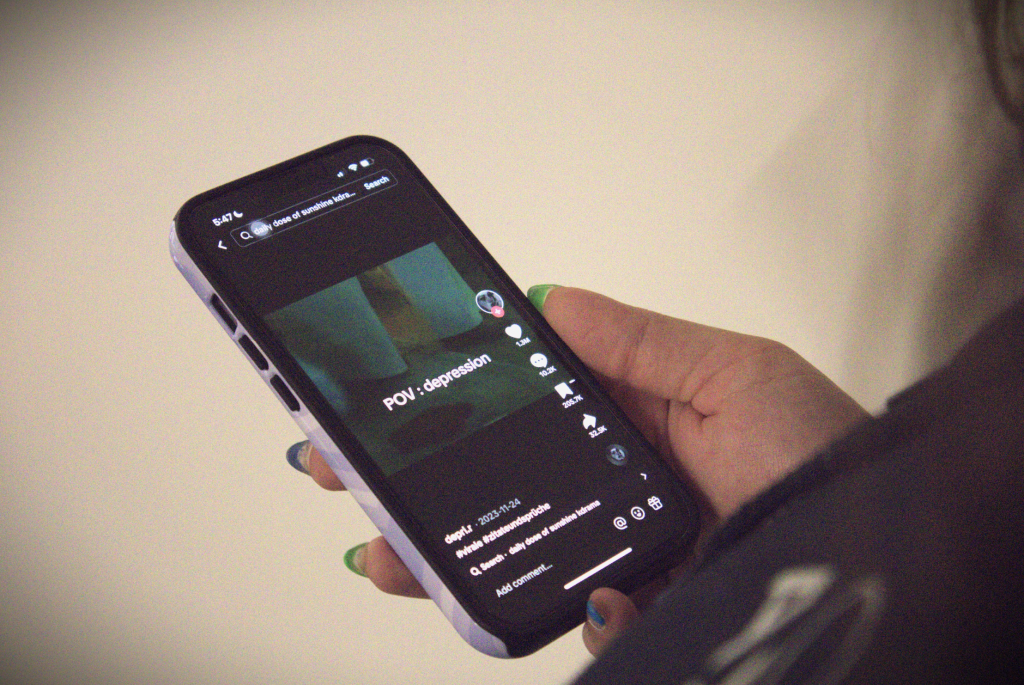

Social media platforms use recommendation algorithms to push posts that are similar to what users have watched or engaged with in the past. This can create what is known as a “filter bubble,” in which someone is overexposed to a particular narrative or viewpoint, said Bei Yu, PhD program director at Syracuse University’s School of Information Studies.
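A toy model shows how recommending “more of the same” can narrow a feed. This is a minimal sketch, assuming each post is described by a set of topic tags and that the recommender simply favors posts sharing the most tags with what the user already engaged with. The function name, tags and catalog are hypothetical, and this is not any platform’s actual recommender.

```python
def recommend(history: list[set[str]], catalog: dict[str, set[str]], k: int = 2) -> list[str]:
    """Return the k candidate posts whose tags overlap most with the user's history.

    Hypothetical content-based recommender for illustration only.
    """
    # All topic tags the user has engaged with so far (empty if no history).
    seen_tags: set[str] = set().union(*history)
    # Score each post by how many of its tags the user has already seen.
    # sorted() is stable, so ties keep their original catalog order.
    ranked = sorted(catalog.items(), key=lambda item: len(item[1] & seen_tags), reverse=True)
    return [post_id for post_id, _ in ranked[:k]]


catalog = {
    "diet_tips": {"fitness", "dieting"},
    "gym_selfie": {"fitness", "body"},
    "cooking": {"food", "recipes"},
    "travel": {"travel", "outdoors"},
}
print(recommend([{"fitness"}, {"body"}], catalog))  # ['gym_selfie', 'diet_tips']
```

Because the cooking and travel posts share no tags with the user’s history, they score zero and sink to the bottom, so the feed keeps reinforcing the topics the user already saw.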

“Machine learning algorithms can be trained to recognize harmful content,” Yu said. “Technical solutions exist and have been used by social media companies to remove harmful content. However, I don’t think they have worked hard enough to remove such content.”

Every social media platform has a unique algorithm based on machine learning and a set of factors referred to as ranking signals. The signals are used to rank the value of each piece of content for every individual user, according to GTECH.
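To make the idea of ranking signals concrete, here is a minimal sketch, assuming the signals are combined with a simple weighted sum. The signal names and weights are hypothetical and not drawn from any platform; real systems learn these relationships with machine learning models rather than hand-set numbers.

```python
from dataclasses import dataclass

# Hypothetical weights for each ranking signal (illustration only).
WEIGHTS = {"likes": 1.0, "comments": 2.0, "shares": 3.0, "watch_time": 0.5}


@dataclass
class Post:
    post_id: str
    signals: dict[str, float]  # signal name -> value for this user and post


def score(post: Post) -> float:
    """Combine a post's ranking signals into one value; unknown signals count as zero."""
    return sum(WEIGHTS.get(name, 0.0) * value for name, value in post.signals.items())


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest score for one user's feed."""
    return sorted(posts, key=score, reverse=True)


posts = [
    Post("a", {"likes": 10, "watch_time": 4}),  # score 12.0
    Post("b", {"comments": 5, "shares": 2}),    # score 16.0
    Post("c", {"likes": 2}),                    # score 2.0
]
print([p.post_id for p in rank_feed(posts)])  # ['b', 'a', 'c']
```

Because each user’s signal values differ, the same weights produce a different ordering for every person.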

TikTok’s platform is different from other social media because it’s designed to surface new content rather than show users posts from accounts they follow. In 2021, The New York Times obtained a leaked internal TikTok document titled “TikTok Algo 101” that provides an overview of how the TikTok algorithm functions.

In the document, TikTok said the algorithm is based on factors such as likes, comments, captions, sounds and hashtags. TikTok also has a For You page, where content is curated specifically for each user based on their interactions within the app.

Algorithms can be designed to identify words and visuals that indicate harmful content, Yu explained. But one of the main challenges with moderating social media content is that posts have to be flagged manually by human workers.
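As a rough illustration of how software can flag posts for a human moderator, the sketch below uses simple keyword matching. The term list and function name are hypothetical; platforms generally rely on trained machine learning classifiers over text and images, not a hard-coded list like this.

```python
import re

# Hypothetical terms that might signal harmful content (illustration only).
FLAG_TERMS = {"self-harm", "thinspo"}


def needs_review(caption: str) -> bool:
    """Return True if the caption contains a flagged term, queuing it for human review."""
    # Lowercase the caption and split it into words, keeping hyphenated words whole.
    words = set(re.findall(r"[a-z\-]+", caption.lower()))
    return not FLAG_TERMS.isdisjoint(words)


captions = ["Morning run by the lake", "thinking about self-harm tonight"]
review_queue = [c for c in captions if needs_review(c)]
print(review_queue)  # ['thinking about self-harm tonight']
```

Matching like this misses misspellings, slang and context (it would not catch “self harm” written with a space), which is one reason flagged and borderline posts still end up in front of human reviewers.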

Reviewing content related to depression, self-harm and eating disorders has reportedly harmed moderators’ mental health, a California lawsuit claims. The working conditions of these jobs have been tied to post-traumatic stress disorder, anxiety, depression and alienation among moderators, who often live in developing countries and are paid low wages.

“Facebook is ignoring its duty to provide a safe workplace and instead creating a revolving door of contractors who are irreparably traumatized by what they witnessed on the job,” Korey Nelson, a partner at the law firm Burns Charest LLP, said in a press release.

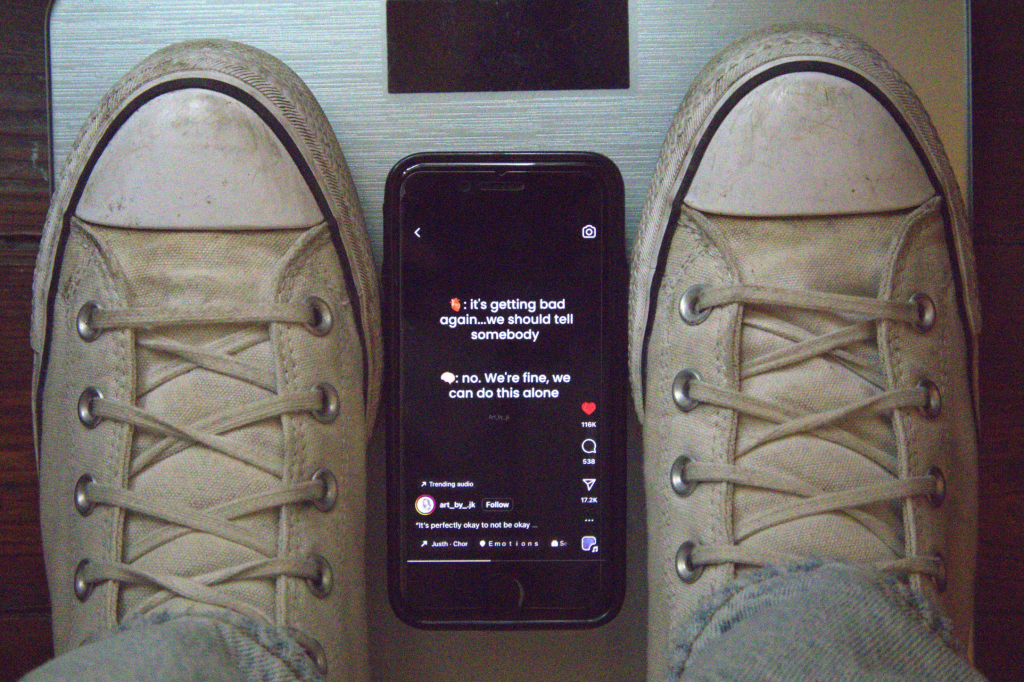

It’s also difficult for moderators to flag harmful content because so much of it floods young people’s feeds. In a study by the Center for Countering Digital Hate, researchers created eight new TikTok accounts with the profile age set to 13. They found that TikTok recommended self-harm and eating disorder content to these teen accounts within minutes of scrolling the app’s For You feed.

Specifically, TikTok recommended suicide content to the accounts within 2.6 minutes and eating disorder content within eight minutes, according to the center’s study. It showed the teen accounts body image and mental health content every 39 seconds on their For You pages.

The study also suggests that vulnerable users, such as people with a mental illness or body image issues, are more likely to see harmful content on their feeds. TikTok accounts created with the phrase “lose weight” in their username received three times as many harmful videos and 12 times as many self-harm videos as standard teen accounts.

For Jason Wood, a national eating disorder advocate and author, social media has at times been a toxic space during his recovery from orthorexia. Because it has damaged his health, he suggests that people check in with themselves regularly when using social media.

“Social media helped fuel my eating disorder,” Wood said. “I was constantly subjected to images of shirtless men working out at the gym. It fueled comparison and made me feel bad about myself.”

Despite the dark aspects of social media, Wood decided to become an advocate in the space. Wood said that sharing his story of PTSD and orthorexia on social media has helped him recover and has helped de-stigmatize eating disorders among men.

Today, Wood serves as the director of community engagement with the National Association of Anorexia Nervosa and Associated Disorders. He is also the author of Starving for Survival, a book about his experiences with orthorexia that sheds light on the stigma around mental health issues like eating disorders among men.

On social media, Wood talks openly about his mental health struggles and the stigma surrounding men with eating disorders. His posts address eating disorder myths, mental health medication, resources and his personal struggles with orthorexia.

Many social media advocates in the mental health space share their stories to help open up conversations about difficult subjects, according to the National Alliance on Mental Illness. To combat the harmful content flooding young people’s social media accounts, advocates work to de-stigmatize illnesses and provide resources for people who may be struggling.

Emma Lembke, a political science junior at Washington University in St. Louis, is a social media regulation advocate who created a non-profit called the Log Off Movement. At 12 years old, Lembke became entranced by social media after she created her first Instagram account. But the fun scrolling quickly turned dark as she began comparing herself to others and felt her mental health deteriorating.

“Sadly, I became heavily addicted to social media,” Lembke said. “I was on apps for five to six hours daily and would go down dangerous rabbit holes that eventually led me to disordered eating. Even though I was enduring these harms, I couldn’t get off.”

Once Lembke realized she was addicted to social media, she took a break from the platforms for a few months. During that time, she dove into research and literature about the dark side of social media. Soon enough, she realized that young people were missing from advocacy for safer social media, so she took it upon herself to change that.

Now, at 21 years old, Lembke works to hold social media companies accountable and help young people build healthy relationships with online platforms. She does this through awareness campaigns, lobbying and speaker events.

An MIT professor led a recent study of mental health on college campuses and found that social media may have contributed to 300,000 new cases of depression across the country. The Log Off Movement is trying to address this issue through community engagement. It has chapters on college campuses where students advocate for a more balanced relationship with social media rather than eliminating it entirely.

The Log Off Movement also aims to solve the problems with social media’s addictive features, persuasive design and targeted content. The organization gives young people practical tips to promote healthy tech habits such as taking social media breaks and following healthy accounts.

Beyond individuals, tech companies are starting to build apps that foster “authenticity” and address issues of “isolation” on social media. For Ish Goel, the co-founder and CEO of Hunch, that meant creating an app that encourages users to ask questions in the form of a poll to meet similar people.

Goel said that his app is meant to foster meaningful connections. Unlike many other social media platforms, this app is anonymous and interactive so people may feel more inclined to express themselves freely. He also believes the app leads to greater spontaneity and authenticity because conversations disappear after 24 hours.

“The predominant focus of top social media apps is media consumption rather than authentic self-expression,” Goel said. “This has resulted in heightened feelings of FOMO, body image insecurities and overwhelming exposure to information, including misinformation, all of which have severe impacts on mental health.”

Instead of waiting for change to come from large social media companies, advocates like Lembke, Wood and Goel are trying to change the landscape themselves. Despite the presence of harmful content on social media, more mental health conversations are happening across platforms.

“It can be a very toxic space,” Wood said. “I encourage people to ask themselves if they are using social media to connect or to compare. If you are using it to compare, then it’s time to step away and unfollow those accounts.”